Below, we showcase some of our reference projects:

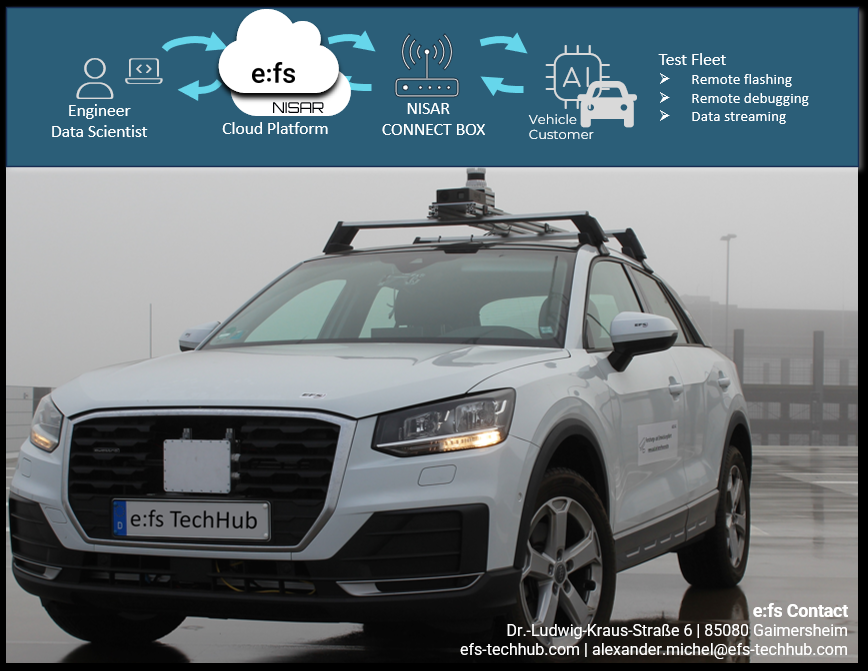

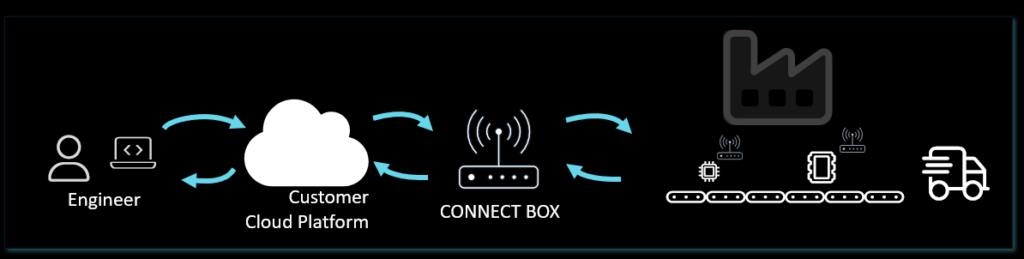

IoT Connectivity, Cloud Platform, Function Development

NISAR and e:fs join forces:

Combining new ideas from the startup ecosystem with extensive experience in series development and validation, we strive to change the way automotive software is developed today.

– Test car for data recording and remote connectivity

– Cloud platform

– IoT connectivity from the car PC to the cloud platform

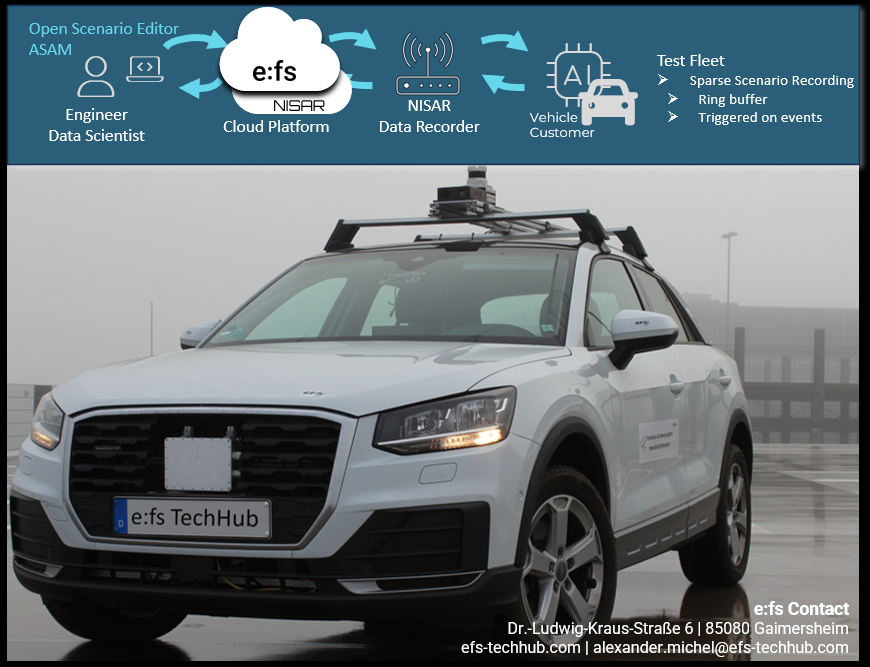

Traffic Scenario Recorder based on a Ring Buffer

NISAR and e:fs join forces:

Combining new ideas from the startup ecosystem with extensive experience in series development and validation, we strive to change the way automotive software is developed today.

– Sparse scenario recording

– Instead of recording hundreds of hours and kilometers of driving, record only what is relevant

– Store vehicle sensor and bus data in a ring buffer

– Persist the ring buffer content when a trigger fires, e.g. sharp braking
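The trigger-based recording idea above can be sketched as follows. This is a minimal illustration, not the recorder's actual implementation. It assumes a single scalar signal (longitudinal acceleration) and a hypothetical braking threshold of -6 m/s². The `Sample`, `TriggeredRingBuffer`, `pre` and `post` names are illustrative. The ring buffer keeps the most recent `pre` samples. When a trigger fires, it freezes that history and appends `post` further samples, so the stored snippet covers the moments before and after the event.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass
class Sample:
    t: float        # timestamp in seconds
    accel_x: float  # longitudinal acceleration in m/s^2 (negative = braking)


class TriggeredRingBuffer:
    """Keeps the last `pre` samples in memory and persists a snippet of
    `pre` + 1 + `post` samples around each sharp-braking trigger."""

    def __init__(self, pre: int, post: int, brake_threshold: float = -6.0) -> None:
        self.buffer: Deque[Sample] = deque(maxlen=pre)  # oldest samples are overwritten
        self.post = post
        self.brake_threshold = brake_threshold          # hypothetical trigger level in m/s^2
        self._pending: Optional[List[Sample]] = None    # snippet still collecting post-trigger data
        self._remaining = 0
        self.snippets: List[List[Sample]] = []          # stand-in for writing to disk or uploading

    def _finish_if_complete(self) -> None:
        if self._pending is not None and self._remaining == 0:
            self.snippets.append(self._pending)
            self._pending = None

    def push(self, s: Sample) -> None:
        if self._pending is not None:
            # A trigger already fired: append post-trigger samples until the window is full.
            # Further triggers inside this window are absorbed into the same snippet.
            self._pending.append(s)
            self._remaining -= 1
        elif s.accel_x <= self.brake_threshold:
            # Sharp braking: freeze the pre-trigger history plus the trigger sample itself.
            self._pending = list(self.buffer) + [s]
            self._remaining = self.post
        self._finish_if_complete()
        self.buffer.append(s)  # the ring buffer keeps running in every case


if __name__ == "__main__":
    rec = TriggeredRingBuffer(pre=3, post=2)
    for i in range(10):
        rec.push(Sample(t=float(i), accel_x=-8.0 if i == 5 else 0.0))
    print([[s.t for s in snip] for snip in rec.snippets])  # [[2.0, 3.0, 4.0, 5.0, 6.0, 7.0]]
```

Only six of the ten samples are kept in this example. That is the "record only what is relevant" effect, here at a toy scale.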

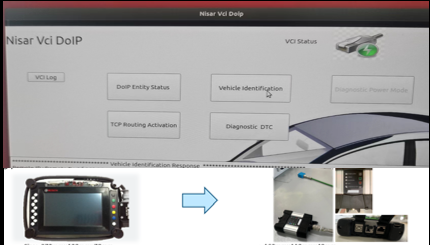

ECU remote monitoring & flashing on the production line

Monitoring & flashing ECUs while still on the production line

ECU software is growing rapidly in size, and so is the flashing time needed at end-of-line (EOL) flashing stations.

With our IoT connector, the ECU is flashed while it is still on the line, saving time at EOL.

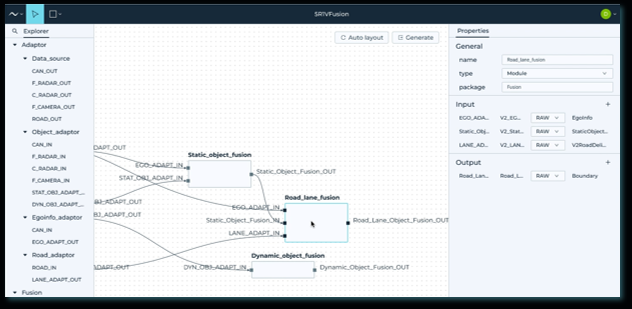

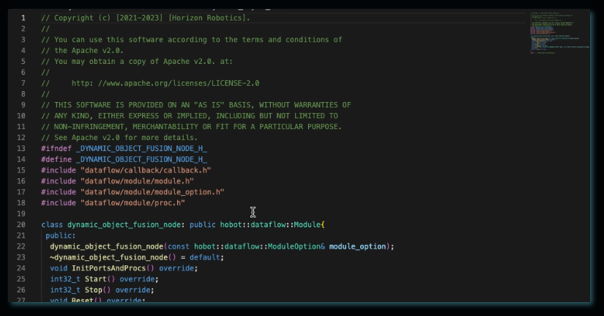

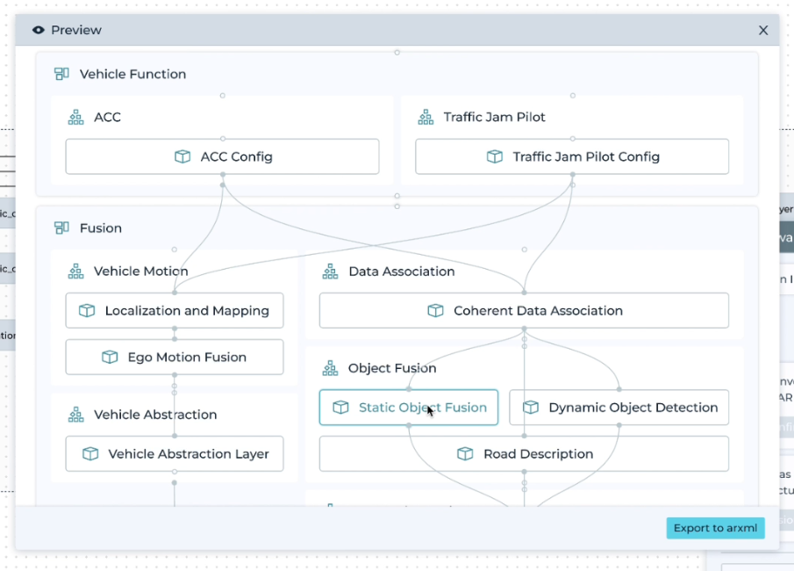

XDP Showcase with TogetherOS

Member of the Horizon Ecosystem

Code generation for Horizon TogetherOS from the software architecture, e.g. for a camera fusion system.

Built into NISAR Architect Design Studio

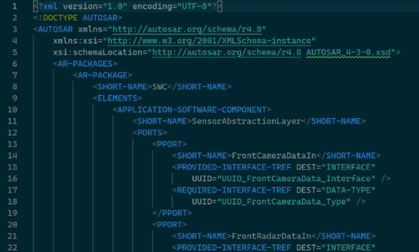

XDP Showcase with AUTOSAR

Phase 1:

Integrating NISAR ADS with the ETAS AUTOSAR toolchain, enabling the generation of AUTOSAR code from abstract architectures

Phase 2:

Bridging pre-development in ROS to AUTOSAR via the abstract architecture

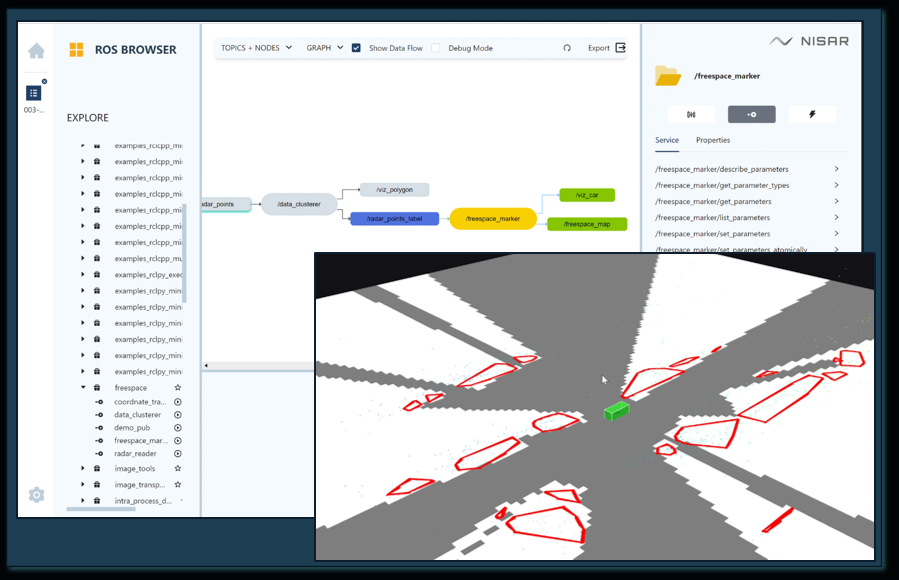

Multi Radar Sensor Fusion

Creation of a central 360° environmental model from several corner radars in ROS

Two variants:

1. Free-space model using occupancy grid maps

2. Geometric obstacle representation using bounding polygons
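For the free-space variant, a common textbook approach is a log-odds occupancy grid. Each corner radar's detections are transformed into a shared vehicle frame. The cells along the beam are then marked as more likely free, and the detection cell as more likely occupied. The sketch below assumes that approach and is not necessarily the project's implementation. The inverse sensor model values (0.7 / 0.4), the clamping limits, and all function and class names are illustrative assumptions.

```python
import math
from typing import List, Tuple

# Assumed inverse sensor model (illustrative values, not tuned for a real radar).
L_OCC = math.log(0.7 / 0.3)   # log-odds added to the cell containing a detection
L_FREE = math.log(0.4 / 0.6)  # log-odds added to cells the beam passed through
L_MIN, L_MAX = -5.0, 5.0      # clamping keeps cells able to change state again


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Integer grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def radar_to_vehicle(mount: Tuple[float, float, float], rng: float, azimuth: float) -> Tuple[float, float]:
    """Convert a polar detection from a radar mounted at (x, y, yaw) into the vehicle frame."""
    mx, my, yaw = mount
    return mx + rng * math.cos(yaw + azimuth), my + rng * math.sin(yaw + azimuth)


class OccupancyGrid:
    """Vehicle-frame grid; cell (0, 0) starts at `origin` (in meters)."""

    def __init__(self, width: int, height: int, resolution: float,
                 origin: Tuple[float, float] = (0.0, 0.0)) -> None:
        self.w, self.h, self.res, self.origin = width, height, resolution, origin
        self.logodds = [[0.0] * width for _ in range(height)]  # 0.0 = unknown (p = 0.5)

    def to_cell(self, x: float, y: float) -> Tuple[int, int]:
        return (math.floor((x - self.origin[0]) / self.res),
                math.floor((y - self.origin[1]) / self.res))

    def _add(self, cx: int, cy: int, delta: float) -> None:
        if 0 <= cx < self.w and 0 <= cy < self.h:  # ignore cells outside the map
            self.logodds[cy][cx] = min(L_MAX, max(L_MIN, self.logodds[cy][cx] + delta))

    def update_ray(self, sensor_xy: Tuple[float, float], hit_xy: Tuple[float, float]) -> None:
        """Mark cells between the radar and the detection as free, the detection cell as occupied."""
        ray = bresenham(*self.to_cell(*sensor_xy), *self.to_cell(*hit_xy))
        for cx, cy in ray[:-1]:
            self._add(cx, cy, L_FREE)
        self._add(*ray[-1], L_OCC)

    def probability(self, cx: int, cy: int) -> float:
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds[cy][cx]))
```

Because every radar writes into the same grid, fusing several corner radars reduces to calling `update_ray` once per detection with that radar's mounting pose. For a 360° map centred on the vehicle, `origin` would be set to `(-width * resolution / 2, -height * resolution / 2)`.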

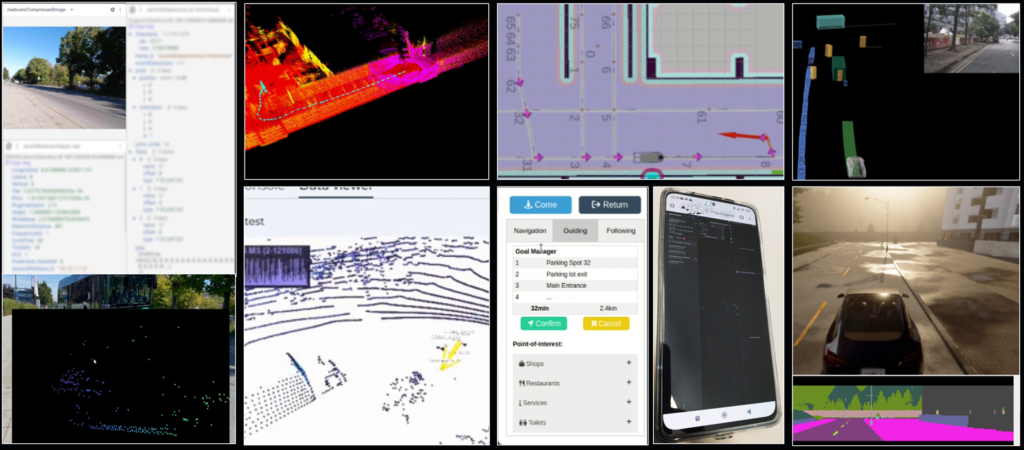

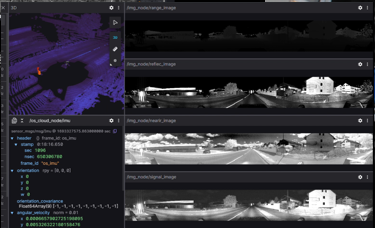

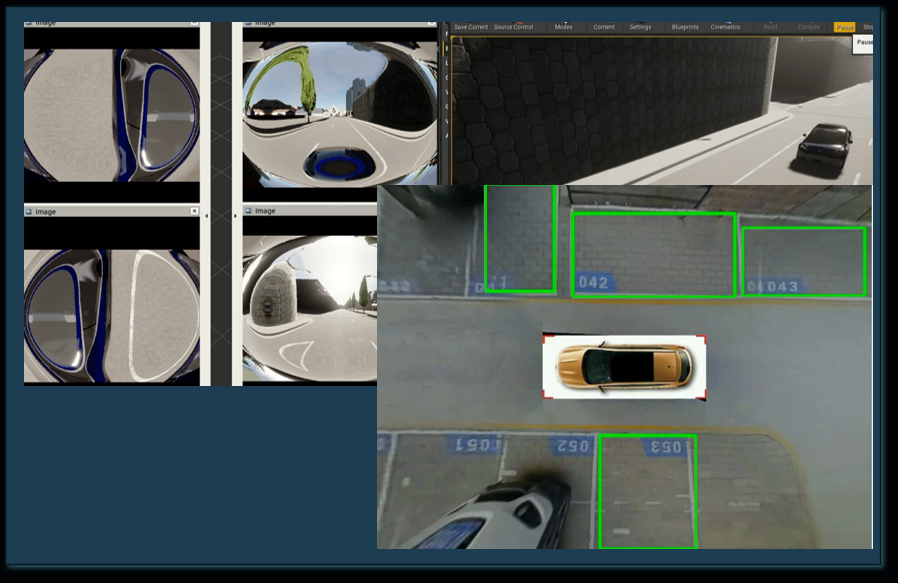

Surround View and Parking Assistant

Creation of a central 360° environmental model from several cameras

Three steps:

1. Bird's-eye view for the driver, stitched from 6 cameras

2. Detection of free and occupied parking spaces

3. Dubins / Reeds-Shepp based trajectory planner

Simulation and real vehicle
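A bird's-eye view is often produced by inverse perspective mapping. If the ground is assumed flat, each camera relates ground-plane coordinates to image pixels through a 3×3 homography obtained from calibration. The sketch below uses that technique; it is not a description of the project's stitching pipeline. The `Camera` container, `bev_lookup` and `build_bev_lut` are hypothetical names. `H` is assumed to have the form K [r1 r2 t], so the homogeneous scale w is positive for points in front of the camera. Images are assumed already undistorted, which matters for fisheye lenses. Stitching here simply takes the first camera that sees a ground point; production systems usually blend overlapping views.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def apply_homography(H: Matrix3, x: float, y: float) -> Optional[Tuple[float, float]]:
    """Map a ground-plane point (x, y) to a pixel (u, v); None if it lies behind the camera."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w <= 1e-9:
        return None  # on or behind the camera plane under the assumed H = K [r1 r2 t]
    return u / w, v / w


@dataclass
class Camera:
    name: str
    H: Matrix3   # ground (meters, vehicle frame) -> undistorted image pixels (assumed)
    width: int
    height: int


def bev_lookup(cameras: List[Camera], x: float, y: float) -> Optional[Tuple[str, float, float]]:
    """Return (camera name, u, v) of the first camera that sees ground point (x, y), else None."""
    for cam in cameras:
        px = apply_homography(cam.H, x, y)
        if px is None:
            continue
        u, v = px
        if 0.0 <= u < cam.width and 0.0 <= v < cam.height:
            return cam.name, u, v
    return None


def build_bev_lut(cameras: List[Camera], x_min: float, y_min: float, res: float,
                  nx: int, ny: int) -> List[List[Optional[Tuple[str, float, float]]]]:
    """Precompute, per BEV cell centre, which camera pixel to sample (once per calibration)."""
    return [[bev_lookup(cameras, x_min + (i + 0.5) * res, y_min + (j + 0.5) * res)
             for i in range(nx)] for j in range(ny)]
```

Because the homographies depend only on calibration, the lookup table can be computed once. Each frame then only needs to copy pixels according to the table.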

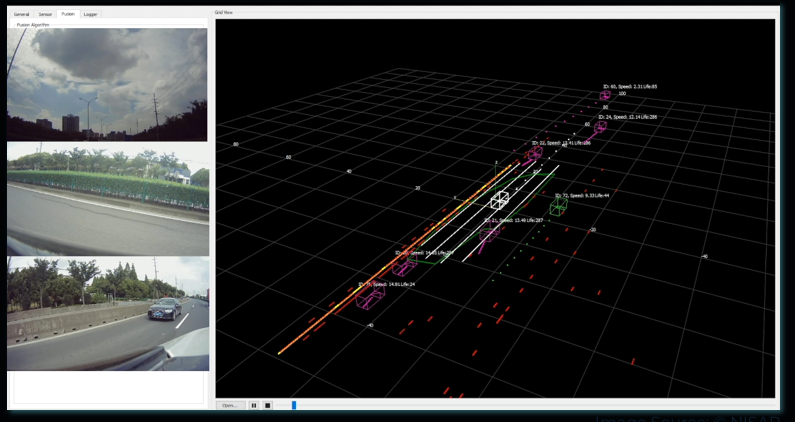

Radar-Camera Fusion & Object Tracking

Car equipped with radar and cameras, each running its own object tracking

• Fusion of the tracks

• Increased accuracy

• Increased robustness

• Tracking beyond the limits of the sensors’ fields of view

• Lane tracking and assignment of objects to lanes

• Planning the navigable space, taking into account the objects' own motion and predicted trajectories
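One standard way to fuse a radar track and a camera track of the same object is covariance intersection (CI). CI stays consistent even when the correlation between the two tracks is unknown. The sketch below uses CI as an example technique; the project's actual fusion method is not stated. It assumes both tracks are already associated, time-aligned and expressed in the same frame. The function name, the grid search over the weight `w`, and the example numbers are illustrative assumptions.

```python
import numpy as np


def covariance_intersection(x1, P1, x2, P2, steps: int = 101):
    """Fuse two track estimates (state x, covariance P) of the same object.

    CI does not need the cross-correlation between the radar and camera tracks;
    the weight w is chosen here by a simple grid search that minimises trace(P).
    """
    P1_inv = np.linalg.inv(P1)
    P2_inv = np.linalg.inv(P2)
    best = None
    for w in np.linspace(0.0, 1.0, steps):
        # Fused information matrix is a convex combination of both inputs.
        P = np.linalg.inv(w * P1_inv + (1.0 - w) * P2_inv)
        trace = float(np.trace(P))
        if best is None or trace < best[0]:
            x = P @ (w * P1_inv @ x1 + (1.0 - w) * P2_inv @ x2)
            best = (trace, x, P, float(w))
    _, x, P, w = best
    return x, P, w


if __name__ == "__main__":
    # Hypothetical tracks: radar is precise longitudinally, camera laterally.
    x_radar, P_radar = np.array([20.0, 1.5]), np.diag([0.1, 1.0])
    x_cam, P_cam = np.array([21.0, 1.0]), np.diag([2.0, 0.05])
    x, P, w = covariance_intersection(x_radar, P_radar, x_cam, P_cam)
    print(x, np.diag(P), w)
```

The fused estimate takes its longitudinal position mainly from the radar and its lateral position mainly from the camera. This is how fusing tracks can raise accuracy beyond either sensor alone.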

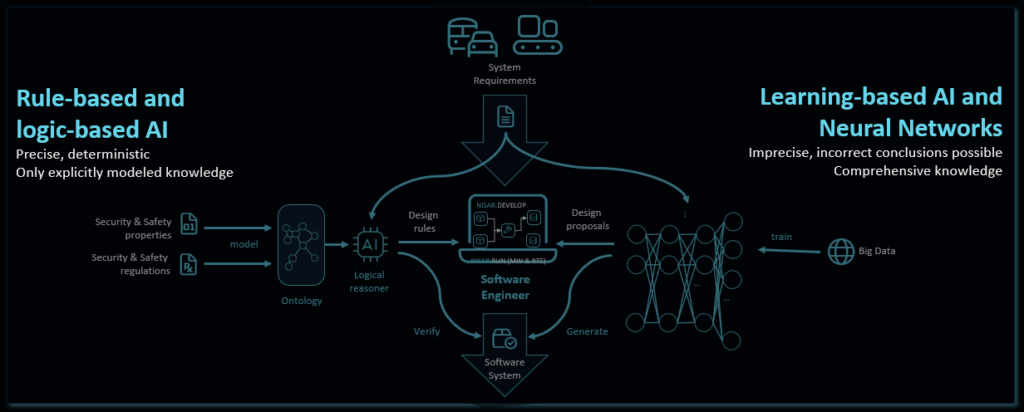

AI-based Cyber Security

AI-based design wizards that integrate cyber security already in early design phases while easing the work of cyber security engineers

Published at the AI.BAY conference 2023, together with TH Ingolstadt CARISSMA, D. Bayerl

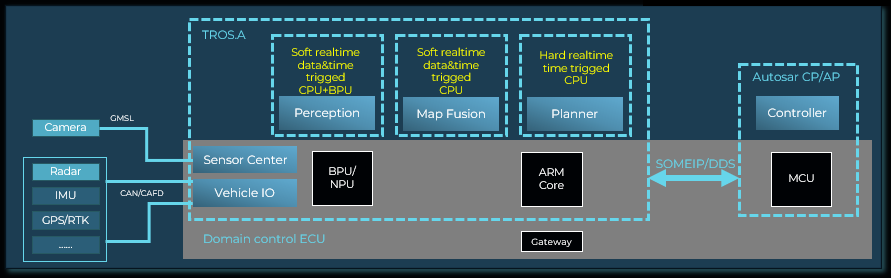

Our test vehicle in Shanghai

Test Vehicle Platform

– MG4 Mulan

Sensor Setup

– 7 cameras, 2-8 MP

– 4 fisheye cameras

– 3 long-range radars (LRR)

Software Components integrated

– BEV model for perception

– HD map fusion

– Radar + camera object fusion

– NoA (Navigation on Autopilot)

– Middleware: TROS, ROS and AUTOSAR

Current Hardware Platforms

– HR J5 × 2 (256 TOPS) + SemiDrive X9U

– HR J5 × 1 + NXP S32G

– NVIDIA Orin + TC397

– HR J3 × 1 (3 TOPS) + AURIX

…additional impressions…